Abstract

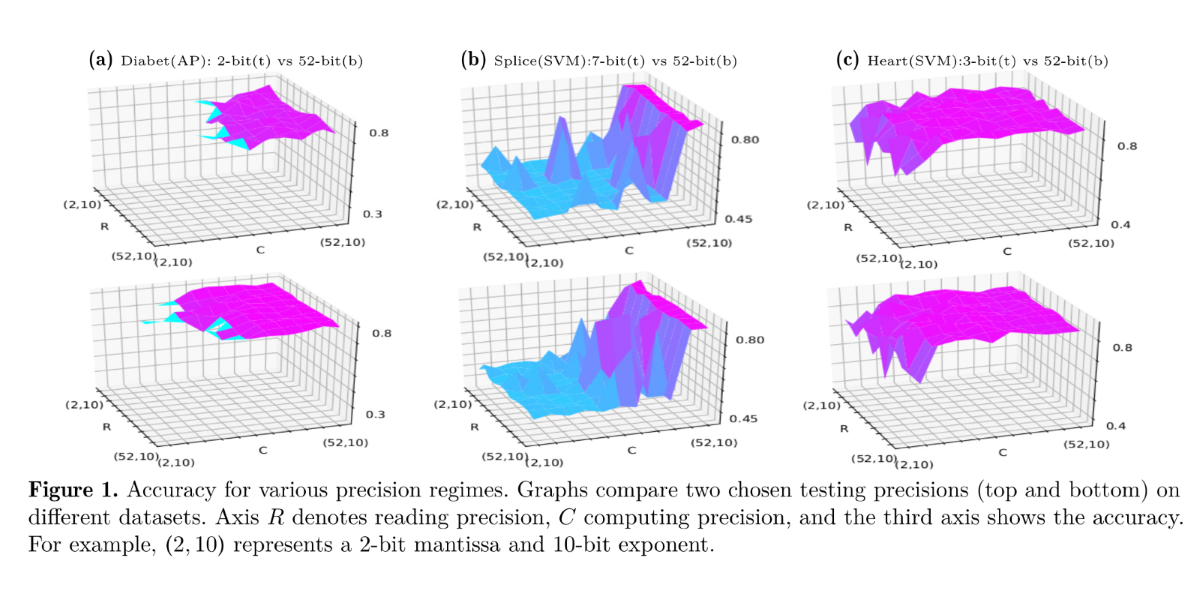

Perceptron and Support Vector Machine (SVM) algorithms are two well-known and widely used linear predictors. They compute a hypothesis function using supervised learning to predict the labels of unknown future samples. Both the training and testing procedures are typically implemented in double-precision floating-point arithmetic to minimize error, which often results in overly conservative implementations that waste runtime and/or energy. In this work, we empirically analyze the impact of floating-point precision on these predictors. We assess which stage is most critical for overall accuracy: reading the dataset, training, or testing. Our analysis focuses in particular on very small floating-point bit-widths (i.e., only several bits of precision) and compares them against the standard single- and double-precision types.
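To make the kind of experiment described above concrete, here is a minimal sketch in Python. It is not the authors' implementation: the helper names (`quantize`, `train_perceptron`, `predict`, `accuracy`) and the toy dataset are hypothetical, and reduced precision is only emulated by rounding the significand of a double to a chosen number of bits (the exponent range is left untouched, which a real small floating-point format would also restrict).

```python
import math


def quantize(x, mantissa_bits):
    """Round x to `mantissa_bits` significant binary digits.

    Assumption: only significand precision is emulated; the exponent
    range stays that of a Python float (IEEE double).
    """
    if x == 0.0 or not math.isfinite(x):
        return x
    m, e = math.frexp(x)              # x == m * 2**e with 0.5 <= |m| < 1
    scale = 2.0 ** mantissa_bits
    return math.ldexp(round(m * scale) / scale, e)


def _activation(w, b, x, bits):
    """Dot product plus bias, rounding after every operation."""
    act = b
    for wi, xi in zip(w, x):
        act = quantize(act + quantize(wi * xi, bits), bits)
    return act


def train_perceptron(samples, labels, bits, epochs=20):
    """Classic perceptron update rule with emulated low precision."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            if y * _activation(w, b, x, bits) <= 0:   # misclassified
                w = [quantize(wi + quantize(y * xi, bits), bits)
                     for wi, xi in zip(w, x)]
                b = quantize(b + y, bits)
    return w, b


def predict(w, b, x, bits):
    return 1 if _activation(w, b, x, bits) > 0 else -1


def accuracy(samples, labels, read_bits, train_bits, test_bits):
    """Use separate precisions for reading, training, and testing."""
    data = [tuple(quantize(v, read_bits) for v in x) for x in samples]
    w, b = train_perceptron(data, labels, train_bits)
    hits = sum(predict(w, b, x, test_bits) == y
               for x, y in zip(data, labels))
    return hits / len(labels)


# Hypothetical, linearly separable toy data (not from the paper).
SAMPLES = [(2.0, 1.0), (1.0, 3.0), (-1.0, -2.0), (-3.0, -1.0)]
LABELS = [1, 1, -1, -1]

if __name__ == "__main__":
    for bits in (3, 24, 53):
        print(bits, accuracy(SAMPLES, LABELS, bits, bits, bits))
```

Passing different values for `read_bits`, `train_bits`, and `test_bits` is one simple way to probe which stage is most sensitive to precision; on real datasets the interesting cases are those where the three settings diverge.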

Citation

Rocco Salvia, Zvonimir Rakamaric

Exploring Floating-Point Trade-Offs in ML

Informal Proceedings of the Workshop on Approximate Computing Across the Stack (WAX), 2018.

BibTeX

@inproceedings{2018_wax_sr,

title = {Exploring Floating-Point Trade-Offs in ML},

author = {Rocco Salvia and Zvonimir Rakamaric},

booktitle = {Informal Proceedings of the Workshop on Approximate Computing Across the Stack (WAX)},

note = {Position paper},

year = {2018}

}

Acknowledgements

We thank Annie Cherkaev and Vivek Srikumar for insightful discussions. This work was supported in part by NSF CCF 1552975.